The Contentful SEO guide

Technical SEO elements

Joshua Lohr, Rob Remington, Nick Switzer, Allan White

Updated: December 6, 2023

The Contentful SEO guide

This is chapter 5 of the series,The Contentful SEO guide.

Summary

“Technical SEO” is an area of responsibility that spans both your content and your code. It is where your structured content is transformed into machine-readable markup that search engines can “spider” effectively and accurately. This is achieved through the design, field validations, and clear coordination between your content model in Contentful and your web application.

Understanding the necessary outputs, structures, and best practices that search engines expect is essential for success in your overall SEO efforts. Common roles involved are content modelers (often admins), frontend developers, and devops or engineers responsible for site generation and delivery. One design goal is to keep the editorial experience as simple as possible for editorial teams; content creators shouldn’t have to understand these highly technical, specialist subjects to do their jobs.

In this chapter, we’ll review the most common technical elements required, and examine how your content models can be designed in Contentful to support these elements.

XML sitemap

XML sitemaps are structured data files that live on your site that help search engines index and understand your content. Google describes XML sitemaps as:

A file where you provide information about the pages, videos, and other files on your site, and the relationships between them. Search engines like Google read this file to crawl your site more efficiently. A sitemap tells Google which pages and files you think are important in your site, and also provides valuable information about these files. For example, when the page was last updated and any alternate language versions of the page. You can use a sitemap to provide information about specific types of content on your pages, including video, image, and news content.

These sitemaps help search engines maintain a site’s placement in search results and discover new and refreshed content. XML sitemap files must contain all indexable pages, have no errors or redirects, and be deployed at the domain’s root. If you have a large, complex site, or sections that are managed by different teams with different kinds of content, using a sitemap index file will let you manage multiple sitemap files.

Optimization & validation tips

- The XML sitemap file (or XML sitemap index file) should be deployed at domain root:

https://www.website.com/sitemap.xml - The XML sitemap should be as current as possible, auto-generated when new content is published or any metadata in the index is changed (such as title, description, or URL).

- The XML sitemap file should contain all indexable pages.

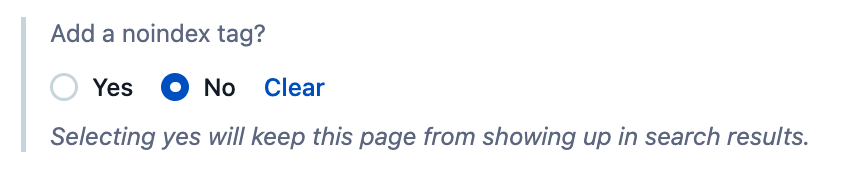

- If a page is set to “noindex” with the meta robots page-level element, then the URL should be automatically excluded from the XML sitemap.

- If a page is set to redirect using (301 or 302 status codes) or returns an error (4xx or 5xx status codes), then the URL should be automatically excluded from the XML sitemap.

- Omit canonicalized URLs. If a page is “canonicalized,” meaning the page specifies a canonical link element different from the page’s URL, then the URL should be automatically excluded from the XML sitemap. These pages will already be included through their natural path. This will also prevent the addition of URLs that are canonicalized to other domains.

Robots directives

We’re about to talk about robots, specifically robot.txt and meta robots tags. Both of these set controls for and give directives to bots (also known as search engine spiders) on how they behave on your website and subsequently index –– or don’t index –– your site’s content. They both have unique sets of controls and use cases, and can be used in conjunction to set strong signals about how you desire a bot to behave on your website, but, more importantly, should be thought of independently. Let’s dive into both.

Robots.txt

While not managed in Contentful, the crucial robots.txt file tells search engine crawlers what to crawl and what not to crawl by using directives instructing them on how to behave on your site, which can have a significant impact on content accessibility and visibility in organic search results. Additionally, the robots.txt file tells crawlers where to find the XML sitemap(s).

Directives can include:

- instructions on how to index or not index site pages and folders,

- which user agents (crawlers) can or can’t access, and

- a path to the XML sitemap.

Optimization tips

- Create and deploy robots.txt file at root domain, e.g.,

https://www.website.com/robots.txt - Recommend using the default directive allowing access to all crawlers to crawl the site:

User-agent: *

Allow: /

- You may want to limit access to certain crawlers, but many crawlers will simply ignore this directive and crawl regardless or cloak themselves as googlebot, so it’s really not worth trying to control them with this method.

- Include a path to your XML sitemap(s) in this format:

Sitemap: https://www.example.com/sitemap.xml

Sitemap: https://www.example.com/sitemap-2.xml

- These days, there’s little need to block much of anything, and you should avoid unnecessary directives. If you need to remove or block a page from Google’s index, it’s best to use the meta robots tag.

- Avoid “hiding” pages, folders, or admin paths using “disallow” directives as this can be a security and competitive risk as anyone can access this file and see these “hidden” paths. Check your competitor’s robots.txt files and see what they are “hiding.” It's fun, and can be enlightening!

- Do not block asset folders such as JavaScript or CSS folders using “disallow” directives, per Google’s guidance, as search engines need these files to properly render this page. Blocking these assets can result in your content being improperly rendered and even considered as not mobile-friendly (since viewports rely on CSS), which can be a very damaging signal to visibility and ranking ability in search results.

Google Search Console & Bing/Baidu/Yandex Webmaster Tools verification

There are a few ways to verify Google Search Console and the other Webmaster Tools accounts to show that you are indeed the owner of the website, which of course provides super valuable access to organic search analytics, indexation controls and insights, visibility warnings and errors, and more.

One of the ways you can verify this is through adding a meta tag to your site’s <head>, which will look something like this:

<meta name="google-site-verification" content="......." />

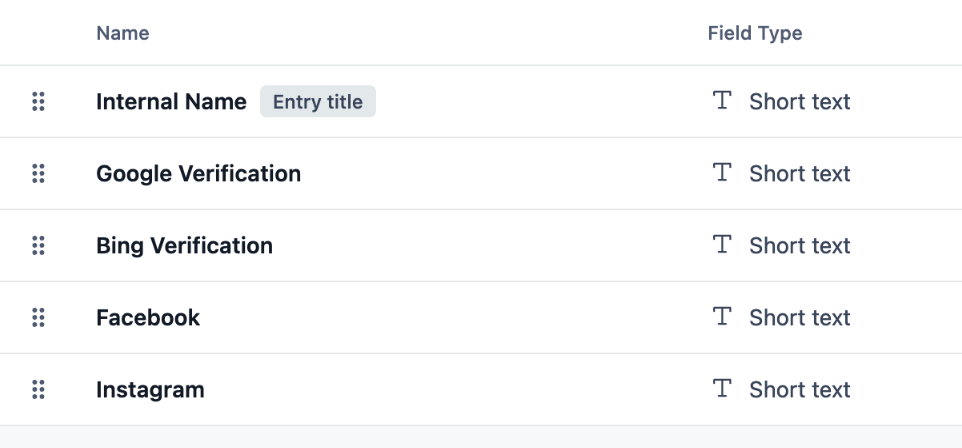

This is often handled in your site code, but you can also manage these in Contentful such as part of a Site Settings content type that contains fields used globally by your website. These could include contact and social media information, along with fields for each platform you want to verify.

Up next

Next, we’ll talk about SEO best practices for URLs and redirects, including details around URL slugs, canonical link elements, breadcrumbs, and redirect management.

Written by

Joshua Lohr

Senior SEO Manager

Josh is the SEO Lead at Contentful. With 15 years of experience working directly in SEO for global brands and agencies, he gets his kicks playing a variety of instruments and appreciating the nature of his adopted home in Scotland.

Rob Remington

Practice Architect

Rob Remington is a Practice Architect at Contentful, where he collaborates across all areas of the company to develop our recommended ways of building with Contentful, and ensure our technical teams have the resources they need to help our customers succeed.

Nick Switzer

Senior Solution Engineer

Nick is a technical people-person who lives for solving business problems with technology. He is a solution engineer at Contentful with 14 years of experience in the CMS space and a background in enterprise web development.

Allan White

Senior Solution Engineer

Allan White is a Senior Enterprise Solutions Engineer at Contentful. A former Contentful customer, he has 25 years of experience in design, video, UX and web development. You can catch him on Twitter or in the Contentful Slack Community.